
Introduction

Kdb+ is a database built on top of an interpreter for a programming language, q, itself built on top of another programming language, k. Kdb+, q, and k were created by the Canadian computer scientist Arthur Whitney to address the inability of traditional relational databases to keep up with growing data volumes and speed requirements.

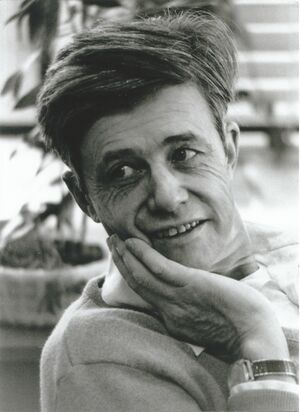

K and q differ in many respects from other programming languages, such as C++, Java, C#, and Python. Whereas these other programming languages are intellectual descendants (for the most part) of C and Simula, k and q are derived from another programming language, APL, designed by the Canadian computer scientist Kenneth (Ken) Eugene Iverson (1920–2004).

Kenneth Iverson

Kenneth Iverson was born on 17 December 1920 near Camrose, a town in central Alberta, Canada. His parents were farmers who came to Alberta from North Dakota; his ancestors came from Trondheim, Norway.

Iverson's friend and colleague Roger Kwok Wah Hui (b. 1953) recalls:

Ken dropped out of school after Grade 9 because it was the height of the Depression and there was work to do on the family farm, and because he thought further schooling only led to becoming a schoolteacher and he had no desire to become one.

During World War II, Ken first served in the Canadian army and then in the R.C.A.F. as a flight engineer in PBY Catalina flying patrol boats. He finally learnt about universities from his Air Force mates, many of whom planned to return to university, thanks to government support for servicemen. While in the service he completed high school courses by correspondence. After the war he graduated summa cum laude with a B.A. from Queen's University and the M.A. and Ph.D. degrees from Harvard. His doctoral dissertation, "Machine Solutions of Linear Differential Equations: Applications to a Dynamic Economic Model" (1954), was jointly supervised by Professors Howard Aiken and Wassily Leontief.

Harvard

I. Bernard Cohen, in his book Howard Aiken: Portrait of a Computer Pioneer, describes Iverson's recollections of his PhD studies:

Kenneth Iverson has recalled graduate study under Aiken as "like an apprenticeship" in which the student "learned the tools of the scholarship trade". Every topic was "used more as a focus for the development of skills such as clarity of thought and expression than as an end in itself." Once admitted to the program, a graduate student underwent a rite of "adoption into the fold". He was given a desk (or a share of a desk) among a group of other graduate students, the permanent staff, or visiting scholars, "most of whom were engaged in some aspect of the design and building of computers". A student was thus "made to feel part of a scholarly enterprise" and was provided, "often for the first time, with easy and intimate access to others more experienced in his chosen field".

In the same book, Cohen writes:

Aiken was especially concerned with clarity of exposition. "Every thesis student," Iverson recalls, "knew and dreaded the fact that Aiken would carefully read and discuss successive chapters of the thesis, insisting upon clear exposition and offering both general and detailed advice." One effect of Aiken's emphasis on writing style was that "budding authors soon learned to call upon their fellow graduate students and other colleagues to review their work." Once a student had a chapter analyzed by Aiken, he would never submit another without the revision necessary to put it into the best form possible.

Iverson served as Assistant Professor of Applied Mathematics at Harvard from 1955 to 1960. During that time he developed the world's first graduate programme in "automatic data processing". Frederick Brooks Jr., who became his teaching assistant, recalls how it began:

Many people think that Aiken was interested only in scientific computers. This was simply not so. During one coffee hour, Aiken turned to Ken Iverson, who had just finished his Ph.D., and said: "These machines are going to be immensely important for business, and I want you to prepare and teach a course in business data processing next fall." There had never been such a course anywhere in the world. Ken was qualified only because he was a mathematician. I was so excited by the prospect that I immediately volunteered to be Ken's graduate teaching assistant.

—Frederick Brooks Jr., Aiken and the Harvard "Comp Lab", in I. Bernard Cohen and Gregory W. Welch, editors, Makin' Numbers, MIT Press, 1999, page 141.

It was in this period that Iverson developed a notation for describing and analyzing various topics in data processing, for teaching classes, and for writing Automatic Data Processing with Brooks.

I was appalled to find that the mathematical notation on which I had been raised failed to fill the needs of the courses I was assigned, and I began work on extensions to notation that might serve. In particular, I adopted the matrix algebra used in my thesis work, the systematic use of matrices and higher-dimensional arrays (almost) learned in a course in Tensor Analysis rashly taken in my third year at Queen's, and (eventually) the notion of Operators in the sense introduced by Heaviside in his treatment of Maxwell's equations.

—Kenneth E. Iverson's Autobiography (with Donald B. McIntyre).

In the same autobiography, Iverson explains how he came to join the Research Division of the International Business Machines Corp. (IBM) in 1960:

Harvard had a benign rule of five years to tenure or out, benign because it prevented fierce Bostonophiles from clinging forever in the hope of eventual tenure. It was particularly benign in those postwar days of the establishment of commercial research centers, where anyone from the science faculty of a respectable university could expect to double his salary—as I did when I joined the Research Division of IBM.

—Kenneth E. Iverson's Autobiography (with Donald B. McIntyre).


The acronym "APL" stands for "A Programming Language", the title of a book by Iverson (1962). During his years at Harvard (1955–1960), Iverson worked on automatic data processing and found that conventional mathematical notation was not well suited to the task. He therefore developed his own notation, borrowing ideas from linear algebra, tensor analysis, and the operators of Oliver Heaviside. The notation was further elaborated at IBM, where Iverson worked from 1960 until 1980, in close collaboration with Adin Falkoff (1921–2010).

The two main ideas behind APL are the efficient-notation idea (Montalbano, 1982) and the stored-program idea. The stored-program idea, which dates back to John von Neumann (1903–1957; see von Neumann, 1945), amounts to being able to store and process code as data. Languages such as q take it a step further: there, evaluating a function's name yields the function itself, displayed as its source code. The efficient-notation idea, that a concise and expressive syntax is critical to solving complex iterative problems correctly and efficiently, was pioneered by Iverson. In the preface to Iverson (1962), he defines a programming language in the following terms:

Applied mathematics is largely concerned with the design and analysis of explicit procedures for calculating the exact or approximate values of various functions. Such explicit procedures are called algorithms or programs. Because an effective notation for the description of programs exhibits considerable syntactic structure, it is called a programming language.

Later, in 1979, Iverson gave a Turing Award Lecture titled Notation as a Tool of Thought (Iverson, 1979). Iverson's notation was effective because it was simple. Instead of a hierarchy of operator precedence, it relied on a single rule: all functions have equal precedence, and an expression is evaluated from right to left, so that each function's right argument is the value of everything to its right. The fundamental data structure in APL is the multidimensional array. APL and its descendants are sometimes referred to as array, vector, or multidimensional programming languages because they implicitly generalize scalar operations to higher-dimensional objects.
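To make the right-to-left rule concrete, here is a minimal C sketch (an illustration only, not APL and not code by Iverson) that evaluates a flat expression of single-digit integers. The function name `eval_rl`, the ASCII operators `+`, `-`, `*`, and the single-digit restriction are assumptions made to keep it short.

```c
#include <assert.h>
#include <ctype.h>
#include <string.h>

/* Evaluate a flat expression such as "2*3+4" in the APL style: there is
   no operator precedence, and each operator's right argument is the value
   of everything to its right. Simplifying assumptions (not APL's rules):
   operands are single digits and the only operators are +, - and *. */
static long eval_rl(const char *s)
{
    size_t n = strlen(s);
    /* A well-formed input alternates digit, operator, digit, ... */
    assert(n % 2 == 1);

    /* Start from the rightmost operand and fold towards the left. */
    assert(isdigit((unsigned char)s[n - 1]));
    long acc = s[n - 1] - '0';
    for (size_t i = n - 1; i >= 2; i -= 2) {
        char op = s[i - 1];
        assert(isdigit((unsigned char)s[i - 2]));
        long left = s[i - 2] - '0';
        switch (op) {
        case '+': acc = left + acc; break;
        case '-': acc = left - acc; break;
        case '*': acc = left * acc; break;
        default:  assert(!"unsupported operator");
        }
    }
    return acc;
}
```

With this rule `eval_rl("2*3+4")` is 2 × (3 + 4) = 14, just as APL's `2×3+4` is 14, whereas C's own precedence would give 10. Likewise `2-3-4` is 2 − (3 − 4) = 3. The point of the design is that a reader never needs a precedence table: the scope of every function is fixed by position alone.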

Iverson's notation was known as "Iverson's notation" within IBM until the name "APL" was suggested by Falkoff. After the publication of A Programming Language in 1962, the notation was used to describe the IBM System/360 computer.

Iverson and Falkoff then focused on the implementation of the programming language. An implementation on System/360 was made available at IBM in 1966 and released to the outside world in 1968.

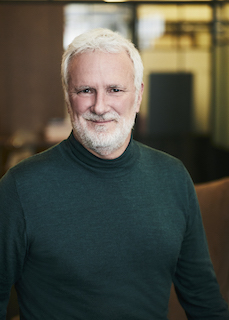

In 1980, Iverson moved to I. P. Sharp Associates (IPSA), a Canadian software firm based in Toronto. There he was joined by Roger Hui (b. 1953) and Arthur Whitney (b. 1957), and the three of them continued to work on APL, adding new ideas to the language. Hui, whose family emigrated to Canada from Hong Kong in 1966, was first exposed to APL at the University of Alberta. Whitney had a background in pure mathematics and had worked with APL at the University of Toronto and Stanford. He had first met Iverson in 1969, at the age of 11, long before joining IPSA: Iverson had been his father's friend at Harvard, and the Whitney family, who then lived in Alberta, would visit Iverson at his house in Mount Kisco, where Iverson introduced the young Whitney to programming and APL.

In 1988, Whitney joined Morgan Stanley, where he helped develop A+, an APL-like programming language with a smaller set of primitive functions, optimized for fast processing of large volumes of time series data. Unlike APL, A+ allowed functions to have up to nine formal parameters, used semicolons to separate statements (so that a single statement could span several lines), used the value of a function's last statement as its result, and introduced so-called dependencies: global variables defined by an expression that is re-evaluated, when the variable is referenced, if the variables it depends on have changed. A+ is now freely available online at http://www.aplusdev.org/. Programmers can also download the kapl font, which includes the special characters used by APL and A+.

One summer weekend in 1989, Whitney visited Iverson at Kiln Farm and produced an interpreter fragment on the AT&T 3B1 computer "on one page and in one afternoon" (Hui, 1992). Hui studied this fragment and, on its basis, developed an interpreter for another APL variant, J. Unlike APL and A+, J used the ASCII character set. It included advanced features, such as support for parallel MIMD operations. Whitney's original fragment appears, under the name Incunabulum, in an appendix to Hui (1992). Other ideas of Whitney's found their way into J: orienting primitives on the leading axis, using prefix rather than suffix agreement, and total array ordering (Hui, 2005, 2006). Ken Iverson, his son Eric Iverson, and Hui all went on to work at Jsoftware in the 1990s and 2000s.

Whitney left Morgan Stanley in 1993 and co-founded Kx Systems with Janet Lustgarten. There he developed another APL variant, k, and on its basis a columnar, in-memory time series database called kdb. Kx Systems was under an exclusive agreement with UBS; when it expired in 1996, k and kdb became generally available. In 1998 ksql was added as a layer on top of k, providing SQL-like constructs such as select; some developers regard it as part of the k language.
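As a rough illustration of what "columnar" means (a sketch under stated assumptions, not a description of kdb's actual storage format), the C code below keeps a small trade table as one contiguous array per field, so an aggregate such as an average price reads only the columns it needs. The `TradeTable` type, its fields, and `avg_price` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* A hypothetical trade table stored column-wise ("struct of arrays"):
   row i is (time[i], sym[i], price[i], size[i]); all columns hold n entries. */
typedef struct {
    size_t n;
    const long *time;           /* e.g. milliseconds since midnight */
    const char *const *sym;     /* instrument symbol */
    const double *price;
    const long *size;
} TradeTable;

/* Average price for one symbol. Only the sym and price columns are
   scanned; time and size are never read, which is the access pattern
   a column layout favours. */
static double avg_price(const TradeTable *t, const char *sym)
{
    double sum = 0.0;
    size_t count = 0;
    for (size_t i = 0; i < t->n; i++) {
        if (strcmp(t->sym[i], sym) == 0) {
            sum += t->price[i];
            count++;
        }
    }
    assert(count > 0);  /* the symbol must occur at least once */
    return sum / (double)count;
}
```

With a row-oriented layout (an array of structs), the same loop would typically pull the unused time and size fields into cache as well; storing each column contiguously avoids that and suits whole-column operations like those of APL-family languages.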

kdb+ was released in June 2003. It was essentially a complete rewrite of kdb for 64-bit systems, based on version 4 of k and on q, a macro-language layer on top of k (or a query language, hence the name) that is itself defined in terms of k. Both q and k compile to the same bytecode, which is executed by the kdb+ bytecode interpreter. Many q keywords are simply names for k primitives: for example, type in q is the equivalent of @: (monadic @) in k. Q is much more readable than k, and most kdb+ developers write their code in q rather than k.

The q programming language has its own table query syntax, q-sql, which in some ways resembles traditional SQL.

APL

Iverson joined the International Business Machines Corp. (IBM) in 1960, and in 1970 was named an IBM Fellow in honour of his contribution to the development of APL.


In 1979, Iverson received the Turing Award for his work on APL. In his Turing Award lecture, Notation as a Tool of Thought, he argued:

The importance of nomenclature, notation, and language as tools of thought has long been recognized. In chemistry and in botany, for example, the establishment of systems of nomenclature by Lavoisier and Linnaeus did much to stimulate and to channel later investigation. Concerning language, George Boole in his Laws of Thought asserted "That language is an instrument of human reason, and not merely a medium for the expression of thought, is a truth generally admitted."

Mathematical notation provides perhaps the best-known and best-developed example of language used consciously as a tool of thought. Recognition of the important role of notation in mathematics is clear from the quotations from mathematicians given in Cajori's A History of Mathematical Notations. They are well worth reading in full, but the following excerpts suggest the tone:

By relieving the brain of all unnecessary work, a good notation sets it free to concentrate on more advanced problems, and in effect increases the mental power of the race.

A. N. Whitehead

The quantity of meaning compressed into small space by algebraic signs, is another circumstance that facilitates the reasonings we are accustomed to carry on by their aid.

Charles Babbage

Nevertheless, mathematical notation has serious deficiencies. In particular, it lacks universality, and must be interpreted differently according to the topic, according to the author, and even according to the immediate context. Programming languages, because they were designed for the purpose of directing computers, offer important advantages as tools of thought. Not only are they universal (general-purpose), but they are also executable and unambiguous. Executability makes it possible to use computers to perform extensive experiments on ideas expressed in a programming language, and the lack of ambiguity makes possible precise thought experiments. In other respects, however, most programming languages are decidedly inferior to mathematical notation and are little used as tools of thought in ways that would be considered significant by, say, an applied mathematician.

The thesis of the present paper is that the advantages of executability and universality found in programming languages can be effectively combined, in a single coherent language, with the advantages offered by mathematical notation.

Montalbano (1982) explains the significance of APL as follows:

H. G. Wells described human history as a race between education and catastrophe. This observation is more pertinent today than it ever has been in the past. But, in the specific case of the proliferation of stored-program digital computers, the education-catastrophe race, in my opinion, takes a particular form: it is a race between technology and methodology, between gadgets and ideas.

We are developing gadgets at an explosive, accelerating, self-fueling rate. We shall be swamped by these gadgets if we don't hasten to develop and apply the ideas we need to control them.

To me, this need defines the importance and the mission of APL. APL provides the best set of gadget-understanding and gadget-controlling ideas currently available. Looking to the future, it provides the best base for the methodology we must develop if we are ever to bring our gadget-based technology under control.

This opinion is based on experience. I was working with computers when they were merely gleams in the eyes of the early designers: I was working with APL when it was nothing but a collection of incomprehensible characters scattered through publications with catchy titles like: The Description of Finite Sequential Processes. In other words, I've been working with both computers and APL since their very early days. And, in looking back on this experience of just over 34 years, (which is my own personal experience of computing), I find that it can best be summarized in terms of two key ideas:

- The stored-program idea: the idea that a procedure or algorithm can be stored as a collection of switch settings in exactly the same way as the data on which the procedure is to work and, as a consequence, that executing such a stored procedure consists of starting it and letting it flip switches until it is done. This was apparently first stated explicitly in a paper drafted by John von Neumann in 1945.

- The efficient-notation idea: the idea that these vast collections of switches, changing their settings in thousandths, millionths, billionths, trillionths, ... of a second, would be pretty hard to manage if we didn't develop a good way to describe them and think about them. I don't know whether this idea was ever presented anywhere in just these terms. Instead, it seemed to be implicit in the activities and writings of many people. But, in my opinion, it received its most effective and fruitful expression in the writings of Kenneth Iverson and others who followed his lead. The most important publication expounding this idea is the book from whose title the letters APL derive their significance.

The stored-program idea provided, and continues to provide, the basis for our current runaway technology. The efficient-notation idea, if we take it seriously and do a lot of thinking and hard work, will help us curb the runaway and direct it into fruitful and productive channels.

J

In one of the essays on the J website, Roger Hui tells the story of the J programming language.

One summer weekend in 1989, Arthur Whitney visited Ken Iverson at Kiln Farm and produced—on one page and in one afternoon—an interpreter fragment on the AT&T 3B1 computer. I studied this interpreter for about a week for its organization and programming style; and on Sunday, August 27, 1989, at about four o'clock in the afternoon, wrote the first line of code that became the implementation described in this document.

The essay then goes on to quote Whitney's one-page interpreter fragment:

```c
typedef char C;typedef long I;
typedef struct a{I t,r,d[3],p[2];}*A;
#define P printf
#define R return
#define V1(f) A f(w)A w;
#define V2(f) A f(a,w)A a,w;
#define DO(n,x) {I i=0,_n=(n);for(;i<_n;++i){x;}}
I *ma(n){R(I*)malloc(n*4);}mv(d,s,n)I *d,*s;{DO(n,d[i]=s[i]);}
tr(r,d)I *d;{I z=1;DO(r,z=z*d[i]);R z;}
A ga(t,r,d)I *d;{A z=(A)ma(5+tr(r,d));z->t=t,z->r=r,mv(z->d,d,r);
R z;}
V1(iota){I n=*w->p;A z=ga(0,1,&n);DO(n,z->p[i]=i);R z;}
V2(plus){I r=w->r,*d=w->d,n=tr(r,d);A z=ga(0,r,d);
DO(n,z->p[i]=a->p[i]+w->p[i]);R z;}
V2(from){I r=w->r-1,*d=w->d+1,n=tr(r,d);
A z=ga(w->t,r,d);mv(z->p,w->p+(n**a->p),n);R z;}
V1(box){A z=ga(1,0,0);*z->p=(I)w;R z;}
V2(cat){I an=tr(a->r,a->d),wn=tr(w->r,w->d),n=an+wn;
A z=ga(w->t,1,&n);mv(z->p,a->p,an);mv(z->p+an,w->p,wn);R z;}
V2(find){}
V2(rsh){I r=a->r?*a->d:1,n=tr(r,a->p),wn=tr(w->r,w->d);
A z=ga(w->t,r,a->p);mv(z->p,w->p,wn=n>wn?wn:n);
if(n-=wn)mv(z->p+wn,z->p,n);R z;}
V1(sha){A z=ga(0,1,&w->r);mv(z->p,w->d,w->r);R z;}
V1(id){R w;}V1(size){A z=ga(0,0,0);*z->p=w->r?*w->d:1;R z;}
pi(i){P("%d ",i);}nl(){P("\n");}
pr(w)A w;{I r=w->r,*d=w->d,n=tr(r,d);DO(r,pi(d[i]));nl();
if(w->t)DO(n,P("< ");pr(w->p[i]))else DO(n,pi(w->p[i]));nl();}

C vt[]="+{~<#,";
A(*vd[])()={0,plus,from,find,0,rsh,cat},
(*vm[])()={0,id,size,iota,box,sha,0};
I st[26]; qp(a){R a>='a'&&a<='z';}qv(a){R a<'a';}
A ex(e)I *e;{I a=*e;
if(qp(a)){if(e[1]=='=')R st[a-'a']=ex(e+2);a= st[ a-'a'];}
R qv(a)?(*vm[a])(ex(e+1)):e[1]?(*vd[e[1]])(a,ex(e+2)):(A)a;}
noun(c){A z;if(c<'0'||c>'9')R 0;z=ga(0,0,0);*z->p=c-'0';R z;}
verb(c){I i=0;for(;vt[i];)if(vt[i++]==c)R i;R 0;}
I *wd(s)C *s;{I a,n=strlen(s),*e=ma(n+1);C c;
DO(n,e[i]=(a=noun(c=s[i]))?a:(a=verb(c))?a:c);e[n]=0;R e;}

main(){C s[99];while(gets(s))pr(ex(wd(s)));}
```
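Whitney's fragment is written in pre-ANSI (K&R) C: it relies on implicit `int` return types, omits all headers, and calls `gets`, which was removed in C11, so modern compilers may reject it as written. Its central data structure is the struct behind the pointer type `A`: a type `t` (0 for integers, 1 for boxed values), a rank `r`, up to three dimensions `d`, and the data `p`. The sketch below is one hedged reading of that structure and of the `tr`, `ga`, `iota`, and `plus` functions in modern C; it is not Whitney's or Hui's code. The names `Array`, `tally`, and `get_array` are hypothetical, a flexible array member and `sizeof` replace the original's over-allocation of `p[2]` and its assumption of 4-byte `long`s (`malloc(n*4)`), and the shape check in `plus` is an addition the original omits.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Array header: t = type (0 integers, 1 boxed), r = rank (0..3),
   d = dimensions, followed by the elements themselves. */
typedef struct {
    long t, r, d[3];
    long p[];            /* flexible array member replaces p[2] */
} Array;

/* Number of elements: product of the first r dimensions (1 for a scalar). */
static long tally(long r, const long *d)
{
    long z = 1;
    for (long i = 0; i < r; i++)
        z *= d[i];
    return z;
}

/* Allocate an array of type t, rank r and shape d (cf. ga). */
static Array *get_array(long t, long r, const long *d)
{
    assert(r >= 0 && r <= 3);
    long n = tally(r, d);
    Array *z = malloc(sizeof *z + (size_t)n * sizeof z->p[0]);
    assert(z != NULL);
    z->t = t;
    z->r = r;
    if (r > 0)
        memcpy(z->d, d, (size_t)r * sizeof d[0]);
    return z;
}

/* Monadic iota: from a scalar n, the vector 0 1 ... n-1. */
static Array *iota(const Array *w)
{
    long n = w->p[0];
    Array *z = get_array(0, 1, &n);
    for (long i = 0; i < n; i++)
        z->p[i] = i;
    return z;
}

/* Dyadic plus: scalar + applied element by element to two integer
   arrays of the same shape, as the fragment's plus does. */
static Array *plus(const Array *a, const Array *w)
{
    assert(a->r == w->r &&
           memcmp(a->d, w->d, (size_t)w->r * sizeof w->d[0]) == 0);
    long n = tally(w->r, w->d);
    Array *z = get_array(0, w->r, w->d);
    for (long i = 0; i < n; i++)
        z->p[i] = a->p[i] + w->p[i];
    return z;
}
```

For example, a rank-0 array holding 5 passed to `iota` yields the vector 0 1 2 3 4, and `plus` applied to that vector and itself yields 0 2 4 6 8. Memory management is left to the caller; the original fragment never frees anything.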

A+

The A+ website tells the story of the A+ programming language:

A+ is a descendent of the language "A" created in 1988 by Arthur Whitney at Morgan Stanley. At the time, various departments had a significant investment in APL applications and talent, APL being a language well-suited to the manipulation of large arrays of numbers. As technology was moving from the mainframe to distributed systems, there was a search for a suitable APL implementation to run on SunOS, the distributed platform of the period. Not happy with the systems evaluated, Arthur, motivated by management, wrote one geared to the business: large capacity, high performance. He was joined in his efforts as the language took on graphics, systems' interfaces, utility support, and an ever-widening user community. Over the course of the next few years, as the business began to reap tangible value from the efforts, the pieces were shaped into a consistent whole and became A+. The "+" referred to the electric graphical user interface. An A+ development group was formally created in 1992.

A+ soon became the language of choice for development of Fixed Income applications. It offered familiarity to the APL programmers, the advantages of an interpreter in a fast-paced development arena and admirable floating-point performance. A significant driver was that many of Morgan Stanley's best and brightest were the developers and supporters of the language. Through their practical application of technical values, they instilled fervent enthusiasm in talented programmers, regardless of their programming language backgrounds.

Whitney himself has described this period in an interview:

My big break was in 1988 when I joined Morgan Stanley. There the motivation was a terabyte of TIC (Treasury International Capital) data, and back then there were a few million transactions a day being processed by realtime trading systems. I think we had one of the biggest trading operations in the world. We had a portfolio that was a billion dollars: half a billion long, half a billion short. We were trading every second electronically. The data set was a terabyte, but we compressed it down. It was pairs trading, and I wrote an APL to do all of that—the big database and the realtime trading—so our entire department was using the language.

I much preferred implementing and coding in LISP, but once I was dealing with big data sets and then having to do fairly simple calculations, APL just seemed to have the better vocabulary.

It had to come up one level. Common LISP even then had about 2,000 primitives. I didn't like that. What I liked was the original LISP, which had car, cdr, cons, and cond, but that was too little. Common LISP was way too big, but a stripped-down version of APL was in the middle with about 50 operations. It's about the same size as C. But the thing about the languages that I implement is that there are no libraries: those 50 operations are it. Everybody builds from there, and the resulting programs are extremely short.

FD

On 2 July 2018, First Derivatives plc announced that it was to buy out minority Kx Systems shareholders:

FD (AIM:FDP.L, ESM:DFP.I) announces that it has reached agreement with the minority shareholders of Kx Systems, Inc. ("Kx Systems"), a subsidiary of the Group, regarding the acquisition by FD of their entire remaining shareholding (the "Transaction"). Upon completion of the Transaction, which is expected to take place on or before 29 June 2019, FD will own 100% of the issued share capital of Kx Systems.

Terms of the Transaction

FD has agreed to acquire the remaining 600,022 Kx Systems shares that it doesn't already own from the minority shareholders, namely Arthur Whitney and Janet Lustgarten, who are co-founders and current directors of Kx Systems, and their associated persons. The aggregate consideration is $53.8m in cash, to be financed from FD's available facilities. The terms of the transaction are in line with those agreed between FD and the minority shareholders in October 2014, and include a payment of $12.0m in lieu of anticipated dividends to the minority shareholders for the period up to 31 October 2021.

Brian Conlon, Chief Executive Officer of FD, commented: "Since we acquired a controlling interest in Kx Systems in October 2014 we have invested heavily across our business to target multiple new industries. The agreement to acquire 100% of Kx Systems provides certainty for the Group and its shareholders as we seek to realise the considerable potential we see for further growth."

Shakti

On 27 November 2018 Sarah Butcher wrote an article in eFinancialCareers entitled The new data platform from the reclusive genius of banking IT:

If you work in financial technology, you should know the name Arthur Whitney. He's the Canadian computer scientist who invented the A+ programming language at Morgan Stanley in the late 1980s. He's also the guy that founded Kx Systems, the data analysis company now owned by First Derivatives, which runs the Kdb databases that underpin most algorithmic trading systems today. Now Whitney—who has been described as having "programming powers beyond the ken of mere mortals"—is back with a newer and better data platform. But he isn't talking to anyone about it.

Released last month, Whitney's new creation is called Shakti (after the name for primordial cosmic energy in Hinduism). According to Whitney, Shakti boasts a level of sophistication that will make it, "a new standard for data storage and analysis within financial services and beyond."

Whitney is reclusive these days and doesn't talk to the press, so Shakti's New York-based director of engineering (and ex-Nomura and Barclays kdb specialist) Fintan Quill is flying the flag in public. "High-data capture rates, combined with low-latency query response times make Shakti the perfect platform for trade signal calculation, pre/post-trade risk, real-time surveillance, back-testing among other things," enthuses Quill.

The new platform is also optimal for cloud computing: "The small memory footprint allows for fast deployment and processing of distributed elastic workloads." It can work with all kinds of datasets, including numerical, temporal and text data, whether structured or not.

Shakti's secret is parallelism, which enables it to work faster than predecessors. Parallelism is built into most of its primitive functions and can expand to multiple jobs and machines via what Quill describes as, "a custom-built interprocess communication protocol as well as a native fork-execution model."

These allow it to function in highly distributed high performance computing (HPC) workloads, while single instruction, multiple data (SIMD) technology allows Shakti to function within the 256 or 512-bit register widths of most modern computers. As a result (says Quill), the platform boasts a combination of low cost and very high performance.

If you think you might want to work with Shakti, it will help to be proficient at programming in Python—The system comes with a Python interface that merges those two interpreters into a single process. "This allows Python developers access to the extremely fast data-handling capabilities of Shakti," says Quill, who says the interface means there's "zero-copy" between the two systems, which reduces processing time.

Shakti is very new and it's not clear who—if anyone—has bought into it already. But after a display of its abilities in London and New York in the past six months, the platform may yet get some takers. Last week, it added a COO, Abby Gruen, who joined from Kx.

Quill suggests that any system involving Arthur Whitney should not go ignored. Morgan Stanley, after all, is still using A+ years after Whitney left. "The simple yet intelligent ideas underlying the APL-derived languages inspired by Ken Iverson have shown the robustness to pass the test of time," says Quill. Shakti is only just starting out, but Whitney's history suggests it might be around for a while to come.